PYTORCH CROSS ENTROPY LOSS CODE

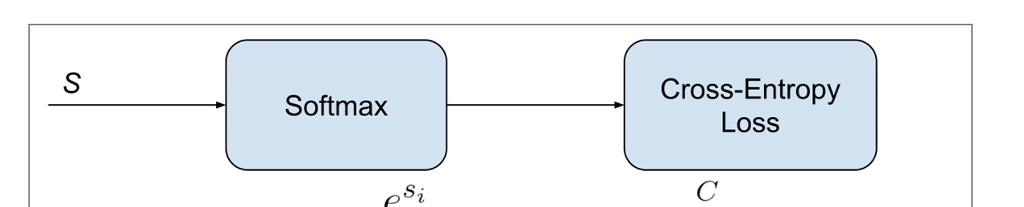

In this section, we will learn about cross-entropy loss PyTorch in Python. Cross-entropy loss is mainly used for classification problems in machine learning. The criterion computes the cross entropy between the input (the model's raw, unnormalized class scores) and the target (the correct class index for each sample).

In the following code, we will import some libraries to calculate the cross entropy between the variables.

- `input = torch.tensor([[…]], dtype=torch.float)` is used as the input variable.
- `target = torch.tensor([…], dtype=torch.long)` is used as the target variable.

After running the above code, we get the following output in which we can see that the cross-entropy loss value is printed on the screen.

Cross entropy loss PyTorch example

In this section, we will learn about cross-entropy loss PyTorch with the help of an example. Cross entropy measures the difference between two probability distributions over the same set of outcomes, typically the true distribution of the labels and the distribution predicted by the model.

In the following code, we will import some libraries with which we can calculate the cross entropy between two variables. Here `num` is NumPy, and the quantity computed is the binary cross entropy (BCE).

- `total_bce_loss = num.sum(-y_true * num.log(y_pred) - (1 - y_true) * num.log(1 - y_pred))` calculates the total binary cross-entropy loss over all samples.
- `mean_bce_loss = total_bce_loss / num_of_samples` calculates the mean cross-entropy loss per sample.
- `crossentropy_value = CrossEntropy(y_pred, y_true)` calls the cross-entropy function on the predicted probabilities and the true labels.
- `print("CE error is: " + str(crossentropy_value))` prints the cross-entropy value.
- `sigmoid = torch.nn.Sigmoid()` squashes the input into the range (0, 1) so that it can be read as a probability.
- `output = crossentropy_loss(input, target)` calculates the output of the cross-entropy loss.

In the following output, we can see that the cross-entropy loss example value is printed on the screen.

Cross entropy loss PyTorch implementation

In this section, we will learn about cross-entropy loss PyTorch implementation in Python. As we know, cross-entropy loss measures the difference between the model's predictions (the input to the loss) and the target labels. Here we calculate that difference directly from a batch of scores and labels.

In the following code, we will import some libraries for calculating the cross-entropy loss.

- `X = torch.randn(batch_size, n_classes)` generates random values that stand in for the model's class scores (logits), one row per sample.
- `target = torch.randint(n_classes, size=(batch_size,), dtype=torch.long)` is used as the target variable: one random class index in the range [0, n_classes) per sample.
- `f.cross_entropy(X, target)` calculates the cross entropy, where `f` is the alias for `torch.nn.functional`.

After running the above code, we get the following output in which we can see that the cross-entropy value is printed on the screen.
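
For the introductory `input`/`target` snippet, the tensor contents are missing, so the following is only a minimal sketch with hypothetical scores for one sample over four classes. It assumes the criterion is `torch.nn.CrossEntropyLoss` and cross-checks the result against the negative log-softmax of the target class.

```python
import torch
import torch.nn as nn

# Hypothetical values: one sample, four raw class scores (logits)
input_scores = torch.tensor([[2.0, 1.0, 0.1, 0.5]], dtype=torch.float)
# The correct class index for that sample
target = torch.tensor([0], dtype=torch.long)

criterion = nn.CrossEntropyLoss()  # applies log-softmax + negative log-likelihood internally
loss = criterion(input_scores, target)

# Cross-check by hand: -log(softmax(scores)[target class])
manual = -torch.log_softmax(input_scores, dim=1)[0, target.item()]

print("CE loss:", loss.item())
print("manual: ", manual.item())
```

Note that the input is passed as raw scores; applying a softmax before `CrossEntropyLoss` would normalize the scores twice.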
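
The definition from the example section can be written out as follows. The notation is chosen here for illustration: $p$ is the true distribution, $q$ the predicted one, $z_1, \dots, z_K$ the logits, $y$ the target class, and $\hat{y}_i$ the predicted probability for sample $i$.

$$
H(p, q) = -\sum_{k=1}^{K} p_k \log q_k
$$

With a one-hot target on class $y$ and $q = \operatorname{softmax}(z)$, only one term survives:

$$
\ell(z, y) = -\log \frac{e^{z_y}}{\sum_{j=1}^{K} e^{z_j}} = -z_y + \log \sum_{j=1}^{K} e^{z_j}
$$

In the binary case with $N$ samples, averaging the per-sample terms gives the quantity that `total_bce_loss / num_of_samples` computes:

$$
\mathrm{BCE} = \frac{1}{N} \sum_{i=1}^{N} \left[ -y_i \log \hat{y}_i - (1 - y_i) \log (1 - \hat{y}_i) \right]
$$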
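
The example section only shows individual lines, so here is a hedged, self-contained sketch that puts them together. The prediction and label arrays are hypothetical, `binary_cross_entropy` plays the role of the article's `CrossEntropy`, and the clipping epsilon is an added assumption to avoid `log(0)`. It then checks the NumPy result against `torch.nn.BCELoss` applied after a `torch.nn.Sigmoid`.

```python
import numpy as num  # the article's alias for NumPy
import torch


def binary_cross_entropy(y_pred, y_true):
    """Mean binary cross entropy: total_bce_loss / num_of_samples."""
    eps = 1e-7  # assumption: clip so log() never sees exactly 0 or 1
    y_pred = num.clip(y_pred, eps, 1 - eps)
    num_of_samples = y_pred.shape[0]
    total_bce_loss = num.sum(-y_true * num.log(y_pred) - (1 - y_true) * num.log(1 - y_pred))
    mean_bce_loss = total_bce_loss / num_of_samples
    return mean_bce_loss


# Hypothetical predicted probabilities and binary labels
y_pred = num.array([0.9, 0.2, 0.7, 0.1])
y_true = num.array([1.0, 0.0, 1.0, 0.0])

crossentropy_value = binary_cross_entropy(y_pred, y_true)
print("CE error is: " + str(crossentropy_value))

# PyTorch check: Sigmoid maps raw scores into (0, 1), BCELoss averages the BCE terms
sigmoid = torch.nn.Sigmoid()
crossentropy_loss = torch.nn.BCELoss()  # default reduction="mean"
logits = torch.logit(torch.tensor(y_pred))  # raw scores whose sigmoid is y_pred
probs = sigmoid(logits)
target = torch.tensor(y_true)
output = crossentropy_loss(probs, target)
print("BCELoss:", output.item())
```

For numerical stability, PyTorch also offers `torch.nn.BCEWithLogitsLoss`, which fuses the sigmoid and the BCE into one step.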

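
The implementation section uses `batch_size` and `n_classes` without showing their values, so this sketch picks small hypothetical sizes and fixes a random seed for reproducibility. It computes `f.cross_entropy(X, target)` and confirms that it equals log-softmax followed by picking each sample's target column, which is also what `f.nll_loss` does on log-probabilities.

```python
import torch
import torch.nn.functional as f

torch.manual_seed(0)  # fixed seed so the random tensors are reproducible
batch_size, n_classes = 5, 3  # hypothetical sizes

# Random raw scores (logits): one row per sample, one column per class
X = torch.randn(batch_size, n_classes)
# One random class index in [0, n_classes) per sample
target = torch.randint(n_classes, size=(batch_size,), dtype=torch.long)

loss = f.cross_entropy(X, target)  # averaged over the batch by default

# The same value built by hand from the log-softmax of each row
log_probs = f.log_softmax(X, dim=1)
manual = -log_probs[torch.arange(batch_size), target].mean()

# ...and via the negative log-likelihood loss on log-probabilities
via_nll = f.nll_loss(log_probs, target)

print(loss.item(), manual.item(), via_nll.item())
```

Passing `reduction="none"` to `f.cross_entropy` returns the per-sample losses instead of their mean.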
